Galileo Research

Pushing the envelope on GenAI evaluation

Our research team works on state of the art metrics, methodologies and benchmarks to advance trustworthy generative AI adoption

Areas of Research

Galileo is committed to helping customers productionize trustworthy, high-performance, cost-effective AI solutions. Our research team works tirelessly to help teams overcome their biggest hurdles when it comes to generative AI evaluation, experimentation, monitoring, and protection.

Evaluation Efficiency

Luna™: Evaluation Foundation Models

Finally move away from asking GPT and human vibe checks to evaluate model responses. The Luna family of Evaluation Foundation Models can be used to evaluate hallucinations, security threats, data privacy checks, and more.

Best-in-Class accuracy

Results for Context Adherence EFM (detects hallucinations in RAG based systems). Based on popular public datasets across industries.

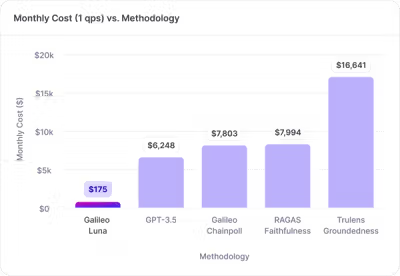

Ultra Low-Cost

Experiment conditions: 1 QPS traffic volume; 4k input token length; using Nvidia L4 GPU

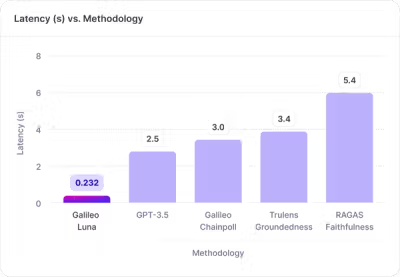

Millisecond Latency

Experiment Conditions: Assuming 4k input token length; using Nvidia L4 GPU

Private

A major bottleneck to evaluations is the need for a test set. Creating a high-quality test set is often labor-intensive and expensive, typically requiring the involvement of human experts or GPT models.

With Galileo Luna, you do not need for ground truth test sets. To do this, we pre-trained EFMs on evaluation-specific datasets across a variety of domains. This innovation not only saves teams considerable time and resources, but also reduces cost significantly. The Luna EFMs power a host of evaluation tasks. Visit our docs to read more about each of these evaluation tasks.

Customizable

Even with 75%+ (industry-leading) accuracy rates, some use cases need much higher precision auto evaluation. For instance, when working with an AI team at a large pharma organization working on new drug discovery, it was clear that the cost of potential hallucinations would be catastrophic to their chances for the drug passing the next phase of clinical trials. Moreover, it was extremely hard to get expert humans to evaluate the model response. Therefore, Galileo’s Luna EFM needed to provide 95%+ accuracy.

To accomplish this, we fine-tuned the client’s EFMs to their specific needs. Every Luna model can be quickly fine-tuned with customers’ data in their cloud using Galileo’s Fine Tune product to provide an ultra-high accuracy custom evaluation model within minutes!

Innovations Under the Hood

Purpose-Built Small Language Models for Enterprise Evaluation Tasks:

- Problem: As the size of language models (LLMs) continues to increase, their deployment becomes more computationally and financially demanding, which is not always necessary for specific evaluation tasks.

- Solution: We tailored multi-headed Small Language Models to precisely meet the needs of bespoke evaluation tasks, focusing on optimizing them for specific evaluation criteria rather than general application.

- Benefit: This customization allows our Evaluation Foundation Models (EFMs) to excel in designated use cases, delivering evaluations that are not only faster and more accurate but also more cost-effective at scale.

Intelligent Chunking Approach for Better Context Length Adaptability:

- Problem: Traditional methods split long inputs into short pieces for processing, which can separate related content across different segments, hampering effective hallucination detection.

- Solution: We employ a dynamic windowing technique that separately splits both the input context and the response into, allowing our model to process every pair and combination of context and response chunks.

- Benefit: This method ensures comprehensive validation, as every part of the response is checked against the entire context, significantly improving hallucination detection accuracy.

Multi-task Training for Enhanced Model Calibration:

- Problem: Traditional single-task training methods can lead to models that excel in one evaluation task but underperform in others.

- Solution: Luna EFMs can conduct multiple evaluations—adherence, utilization, and relevance—using a single input, thanks to multi-task training.

- Benefit: By doing this, when evaluations are being generated, EFMs can “share” insights and predictions with one another, leading to more robust and accurate evaluations.

Data Augmentation for Support Across a Broad Swath of Use Cases:

- Problem: Evaluations are not one-size-fits-all. Evaluation requirements in financial services vary significantly from evaluation requirements in consumer goods. Limited data diversity can restrict a model’s ability to generalize across different use cases.

- Solution: Each Luna EFM has been trained on large high-quality datasets that span industries and use cases. We enrich our training dataset with both synthetic data generated by LLMs for better domain coverage and data augmentations that mimic transformations used in computer vision.

- Benefit: These strategies make each model more robust and flexible, making them more effective and reliable in real-world applications.

Token Level Evaluation for Enhanced Explainability:

- Problem: Standard approaches may not effectively pinpoint or explain where hallucinations occur within responses.

- Solution: Our model classifies each sentence as adherent or non-adherent by comparing it against every piece of the context, ensuring a piece of context supports the response part.

- Benefit: This granularity allows us to show users exactly which parts of the response are hallucinated, enhancing transparency and the utility of model outputs.

Learn More

Hallucination Detection

Chainpoll: A High Efficacy Method for LLM Hallucination Detection

A high accuracy methodology for hallucination detection that provides an 85% correlation with human feedback - your first line of defense when evaluating model outputs.

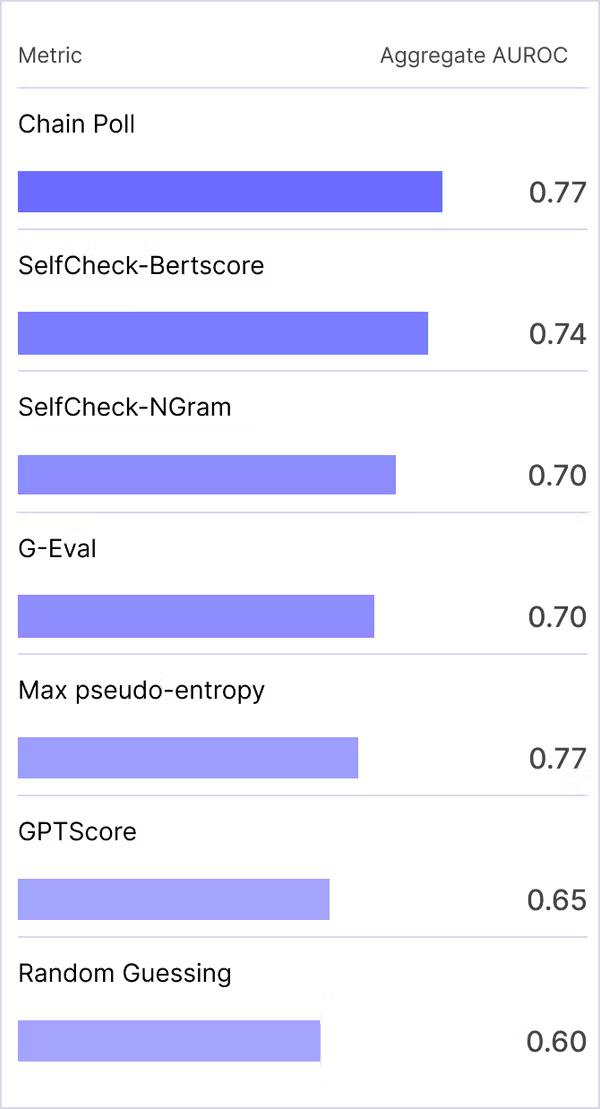

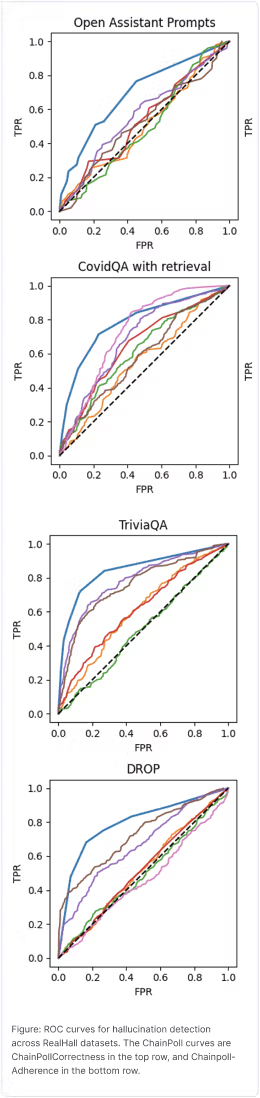

-ChainPoll: a novel approach to hallucination detection that is substantially more accurate than any metric we’ve encountered in the academic literature. Across a diverse range of benchmark tasks, the ChainPoll outperforms all other methods – in most cases, by a huge margin.

- ChainPoll dramatically out-performs a range of published alternatives – including SelfCheckGPT, GPTScore, G-Eval, and TRUE – in a head-to-head comparison on RealHall.

- ChainPoll is also faster and more cost-effective than most of the metrics listed above.

- Unlike all other methods considered here, ChainPoll also provides human-readable verbal justifications for the judgments

- it makes, via the chain-of-thought text produced during inference.

- Though much of the research literature concentrates on the the easier case of closed-domain hallucination detection, we show that ChainPoll is equally strong when detecting either open-domain or closed domain hallucinations. We develop versions of ChainPoll specialized to each of these cases: ChainPoll-Correctness for open-domain and ChainPoll-Adherence for closed-domain.

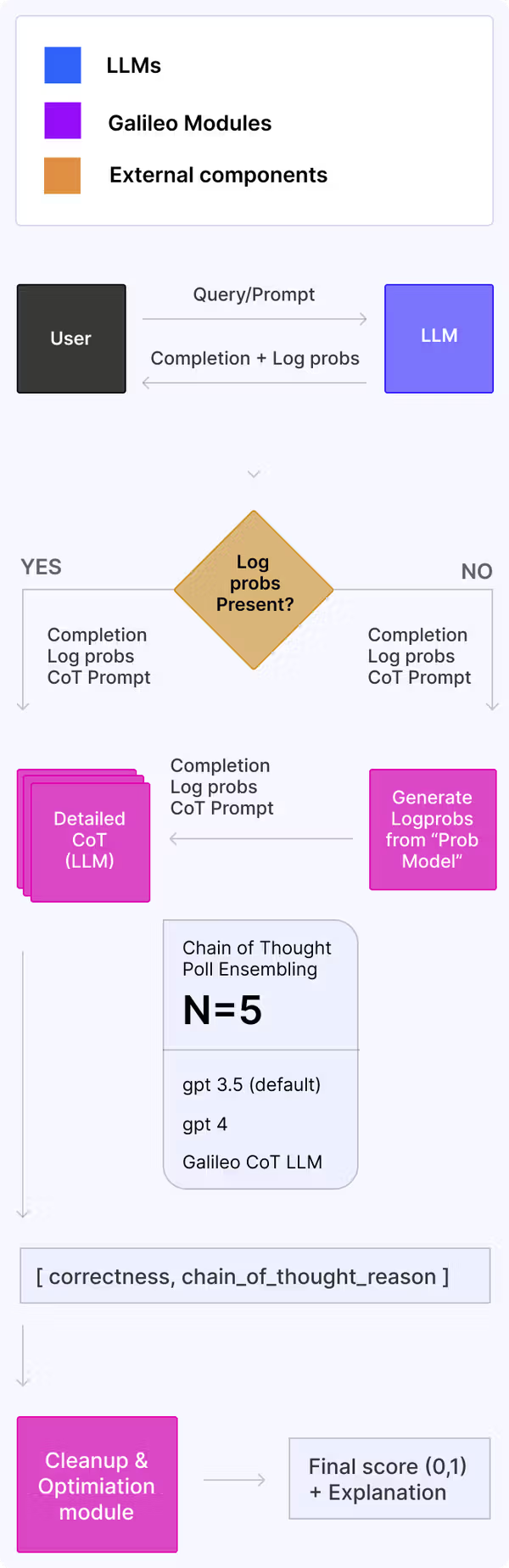

How does this work?

Chainpoll piggybacks on the strong reasoning power of your LLMs, but further leverages a chain of thought technique to poll the model multiple times to judge the correctness of the response. This technique not only provides a metric to quantify the degree of potential hallucinations, but also provides an explanation based on the context provided, in the case of RAG based systems.

Learn More

Al System Diagnostics

Powerful RAG Analytics

Get quantitative, unique, action-oriented auto-insights about your RAG system. Optimize your chunking strategy, the quality of your retriever and the adherence of the output to the context you provided.

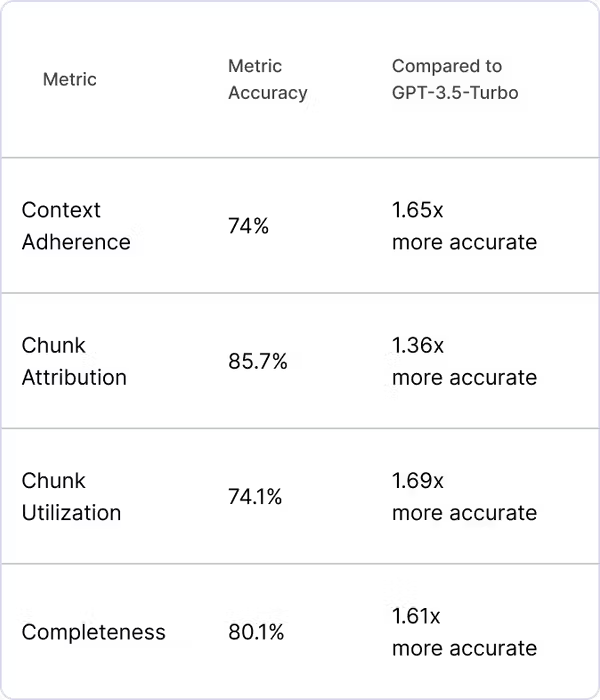

Galileo’s RAG analytics facilitate faster and smarter development by providing detailed RAG evaluation metrics with unmatched visibility. Our four cutting edge metrics help AI builders optimize and evaluate both the LLM and Retriever sides of their RAG systems.

- Chunk Attribution: A chunk-level boolean metric that measures whether a ‘chunk’ was used to compose the response.

- Chunk Utilization: A chunk-level float metric that measures how much of the chunk text that was used to compose the response.

- Completeness: A response-level metric measuring how much of the context provided was used to generate a response

- Context Adherence: A response-level metric that measures whether the output of the LLM adheres to (or is grounded in) the provided context.

How does this work?

Extending our findings from Chainpoll, we make extensive use of GPT-4 as an annotator, and use GPT-4 annotations as a reference against which to compare each metric we develop.

- While GPT-4 produces high-quality results, using GPT-4 to compute metrics would be prohibitively slow and expensive for our users.

- Hence, we focus on building metrics that use more cost-effective models under the hood, viewing GPT-4 results as a quality bar to shoot for. The ideal, here, would be GPT-4-quality results, offered up with the cost and speed of GPT-3.5-like models.

- While we benchmark all metrics against GPT-4, we also supplement these evals where possible with other, complementary ways of assessing whether our metrics do what we want them to do.

To create new metrics, we design new prompts that can be used with ChainPoll.

- We evaluate many different potential prompts, evaluating them against GPT-4 and other sources of information, before settling on one to release.

- We also experiment with different ways of eliciting and aggregating results -- i.e. with variants on the basic ChainPoll recipe, tailored to the needs of each metric.

- For example, we've developed a novel evaluation methodology for RAG Attribution metrics, which involves "counterfactual" responses generated without access to some of the original chunks. We'll present this methodology in our forthcoming paper.

High Quality Fine-Tuning

Data Error Potential

The Galileo Data Error Potential (DEP) score has been built to provide a per sample holistic data quality score to identify samples in the dataset contributing to low or high model performance i.e. ‘pulling’ the model up or down respectively. In other words, the DEP score measures the potential for "misfit" of an observation to the given model.

Categorization of "misfit" data samples includes:

- Mislabelled samples (annotation mistakes)

- Boundary samples or overlapping classes

- Outlier samples or Anomalies

- Noisy Input

- Misclassified samples

- Other errors

This sub-categorization is crucial as different dataset actions are required for each category of errors. For example, one can augment the dataset with samples similar to boundary samples to improve classification.

How does this work?

DEP Score Calculation

The base calculation behind the DEP score is a hybrid ‘Area Under Margin’ (AUM) mechanism. AUM is the cross-epoch average of the model uncertainty for each data sample (calculated as the difference between the ground truth confidence and the maximum confidence on a non ground truth label).

AUM = p(y*) - p(ymax)y^max!=y*

We then dynamically leverage K-Distinct Neighbors, IH Metrics (multiple weak learners) and Energy Functions on Logits, to clearly separate out annotator mistakes from samples that are confusing to the model or are outliers and noise. The 'dynamic' element comes from the fact that DEP takes into account the level of class imbalance, variability etc to cater to the nuances of each dataset.

DEP Score Efficacy

To measure the efficacy of the DEP score, we performed experiments on a public dataset and induced varying degrees of noise. We observed that unlike Confidence scores, the DEP score was successfully able to separate bad data (red) from the good (green). This demonstrates true data-centricity (model independence) of Galileo’s DEP score. Below are results from experiments on the public Banking Intent dataset. The dotted lines indicate a dynamic thresholding value (adapting to each dataset) that segments noisy (red) and clean (green) samples of the dataset.

Learn More

Powerful Metrics

Prompt and Response Quality

Security Threat Detection

Data Privacy

Standard Metric