Model Insights

gemini-1.5-flash-001

Details

Developer

License

NA (private model)

Model parameters

NA (private model)

Supported context length

2000k

Price for prompt token

$0.075/Million tokens

Price for response token

$0.15/Million tokens
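
The two prices above turn into a per-request cost with simple arithmetic. The following is a minimal sketch, not an official pricing calculator: the function name `estimate_cost_usd` and the example token counts are hypothetical, and it assumes cost scales linearly with token count, with no tiering, caching discounts, or minimum charges.

```python
# Prices listed above, in USD per million tokens.
PROMPT_PRICE_PER_MILLION = 0.075
RESPONSE_PRICE_PER_MILLION = 0.15


def estimate_cost_usd(prompt_tokens: int, response_tokens: int) -> float:
    """Estimate the cost of one request, assuming purely linear pricing."""
    prompt_cost = prompt_tokens / 1_000_000 * PROMPT_PRICE_PER_MILLION
    response_cost = response_tokens / 1_000_000 * RESPONSE_PRICE_PER_MILLION
    return prompt_cost + response_cost


# Hypothetical request: a 25,000-token prompt and a 300-token response.
cost = estimate_cost_usd(prompt_tokens=25_000, response_tokens=300)
print(f"${cost:.6f} per request")
```

With these assumed token counts, almost all of the cost comes from the prompt, which is typical for RAG workloads where the retrieved context dominates the request.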

Model Performance Across Task-Types

Chainpoll Score

Short Context

0.94

Medium Context

1

Long Context

0.92

Model Insights Across Task-Types

Digging deeper, here's a look at how gemini-1.5-flash-001 performed across specific datasets.

Short Context RAG

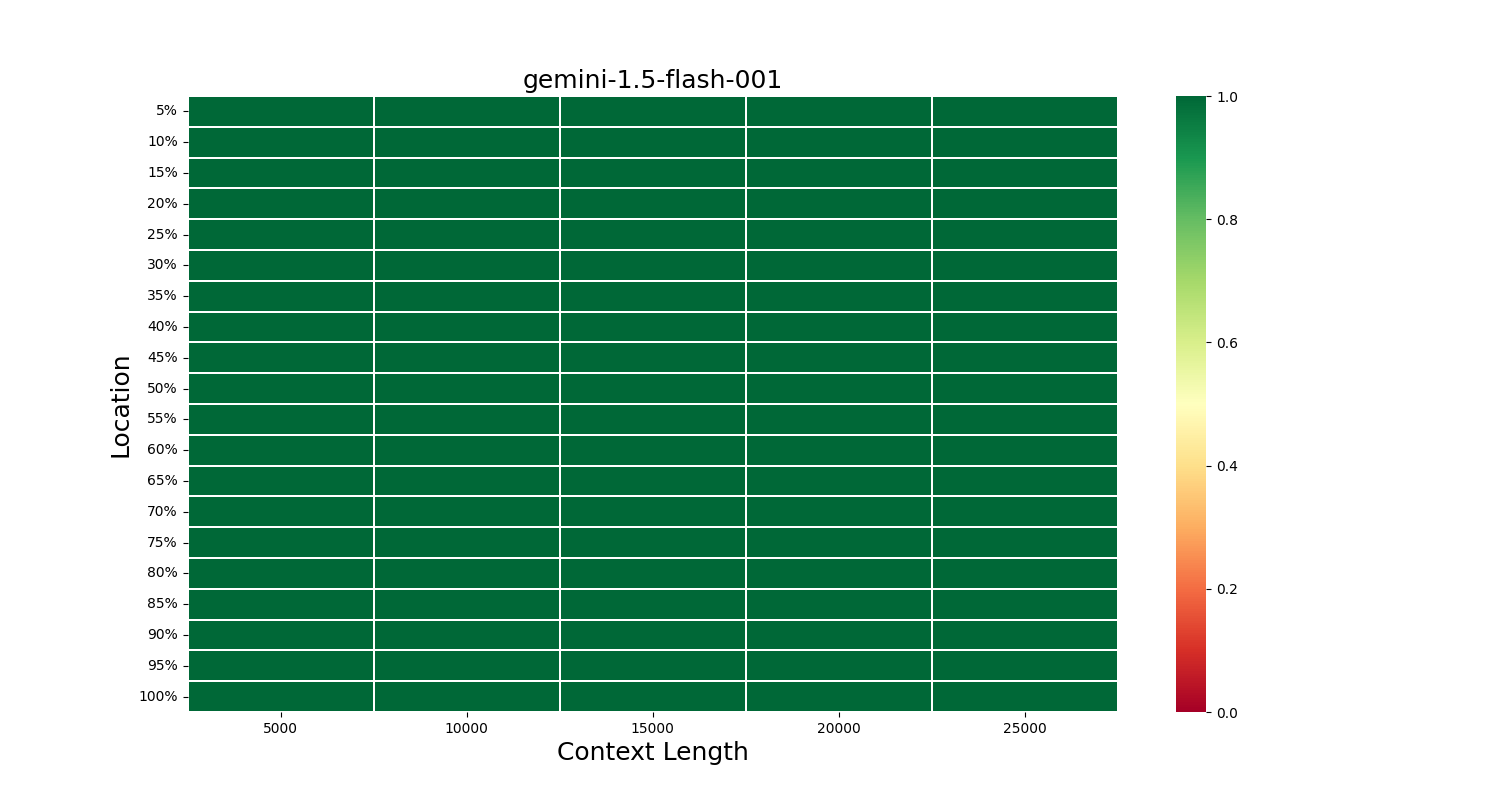

Medium Context RAG

This heatmap indicates the model's success in recalling information at different locations in the context. Green signifies success, while red indicates failure.

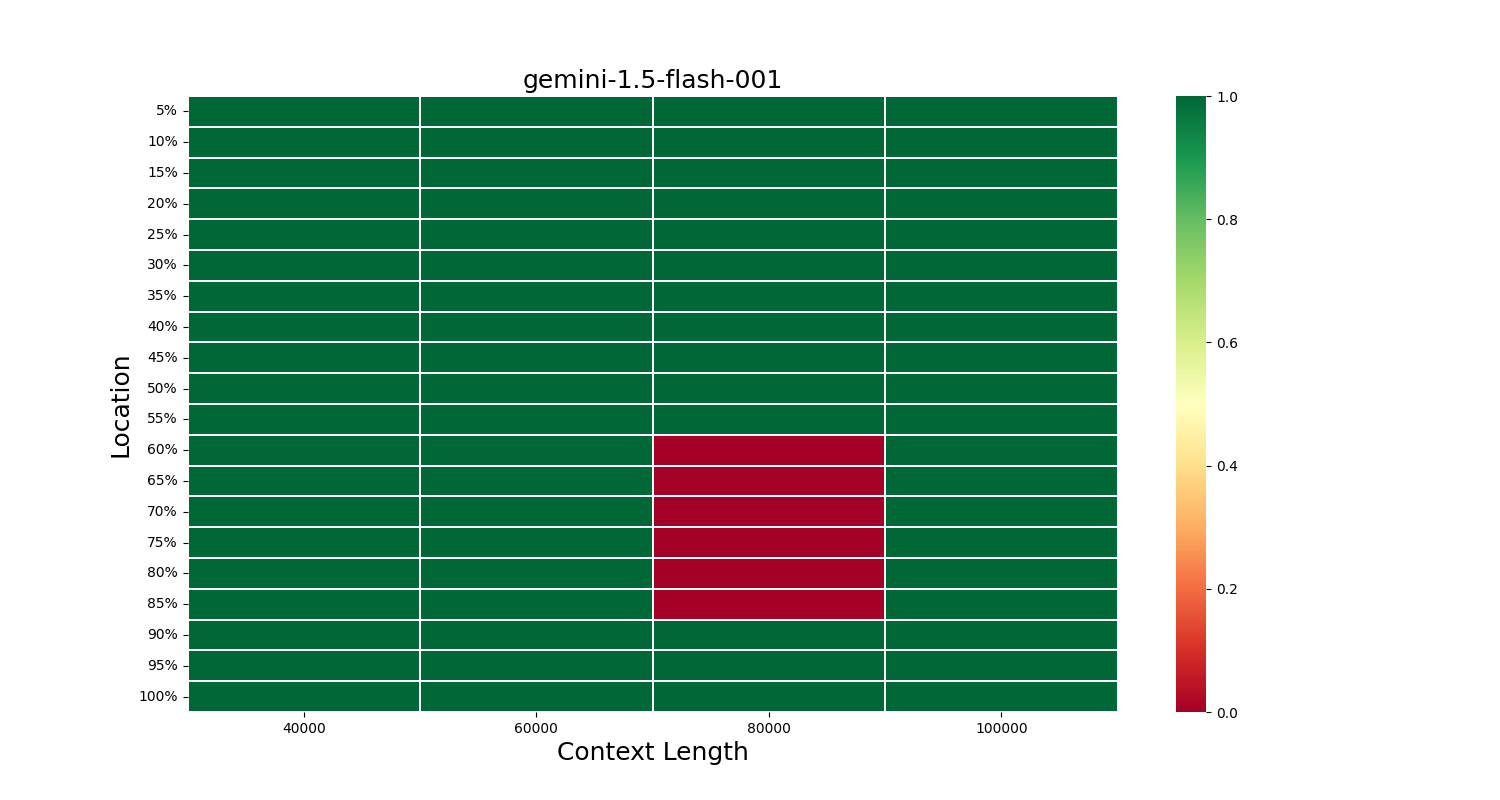

Long Context RAG

This heatmap indicates the model's success in recalling information at different locations in the context. Green signifies success, while red indicates failure.

Performance Summary

| Tasks | Task insight | Cost insight | Dataset | Context adherence | Avg response length |
|---|---|---|---|---|---|
| Short context RAG | The model demonstrates exceptional reasoning and comprehension skills, excelling at short context RAG. It shows good mathematical proficiency, as evidenced by its performance on the DROP and ConvFinQA benchmarks. It comes out as one of the best small closed-source models, ahead of Haiku. | One of the most affordable closed-source models, with best-in-class performance. It is nearly 10x cheaper than Sonnet 3.5 and 50% cheaper than Llama-3-70b. We highly recommend it. | DROP | 0.92 | 303 |
| | | | Hotpot | 0.92 | 303 |
| | | | MS Marco | 0.95 | 303 |
| | | | ConvFinQA | 0.98 | 303 |
| Medium context RAG | Flawless performance, making it suitable for any context length up to 25,000 tokens. | We recommend this model for this task because of its perfect score and lowest price. | Medium context RAG | 1.00 | 303 |
| Long context RAG | Great performance overall, but it does not work well at a context length of 80,000 tokens. | A cost-effective model for its performance range. For the best performance, we recommend Claude 3.5 Sonnet. | Long context RAG | 0.92 | 303 |
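
The four short context rows can be related to the headline Short Context score of 0.94 with a quick calculation. The sketch below assumes the headline figure is an unweighted mean of the per-dataset context adherence scores; the source does not state how the scores are aggregated, so treat this as a consistency check and not as the index's actual method.

```python
from statistics import mean

# Context adherence per short context dataset, copied from the table above.
short_context_scores = {
    "DROP": 0.92,
    "Hotpot": 0.92,
    "MS Marco": 0.95,
    "ConvFinQA": 0.98,
}

# Assumption: every dataset carries equal weight in the headline score.
average = mean(short_context_scores.values())
print(round(average, 2))  # prints 0.94
```

Under that assumption the result agrees with the reported Short Context score.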