Model Insights

mistral-7b-instruct-v0.3

Details

Developer

Mistral AI

License

Apache 2.0

Model parameters

7B

Supported context length

32k

Price for prompt tokens

$0.20 per million tokens

Price for response tokens

$0.20 per million tokens
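At the listed rates, cost scales linearly with token counts. You multiply the prompt and response tokens by their per-million prices and divide by 1,000,000. The sketch below works through that arithmetic for a hypothetical request with a 2,000-token prompt and a 300-token response. Those token counts and the `request_cost` helper are illustrative assumptions, not figures from this page.

```python
# Listed prices for mistral-7b-instruct-v0.3 (USD per million tokens).
PROMPT_PRICE_PER_M = 0.2
RESPONSE_PRICE_PER_M = 0.2


def request_cost(prompt_tokens: int, response_tokens: int) -> float:
    """Estimated USD cost of one request at the listed per-token prices.

    Hypothetical helper for illustration; it ignores any provider-side
    minimums, batching discounts, or rounding.
    """
    return (prompt_tokens * PROMPT_PRICE_PER_M
            + response_tokens * RESPONSE_PRICE_PER_M) / 1_000_000


# Hypothetical workload: 2,000 prompt tokens and 300 response tokens.
cost = request_cost(2_000, 300)
print(f"per request: ${cost:.6f}")                   # per request: $0.000460
print(f"per 1M requests: ${cost * 1_000_000:,.2f}")  # per 1M requests: $460.00
```

Because both prices are equal here, only the total token count matters. With different prompt and response prices, the two terms would need to be weighted separately, which is why the helper keeps them apart.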

Model Performance Across Task-Types

ChainPoll score

Short Context

0.78

Medium Context

0.94

Model Insights Across Task-Types

Digging deeper, here’s a look at how mistral-7b-instruct-v0.3 performed across specific datasets.

Short Context RAG

Medium Context RAG

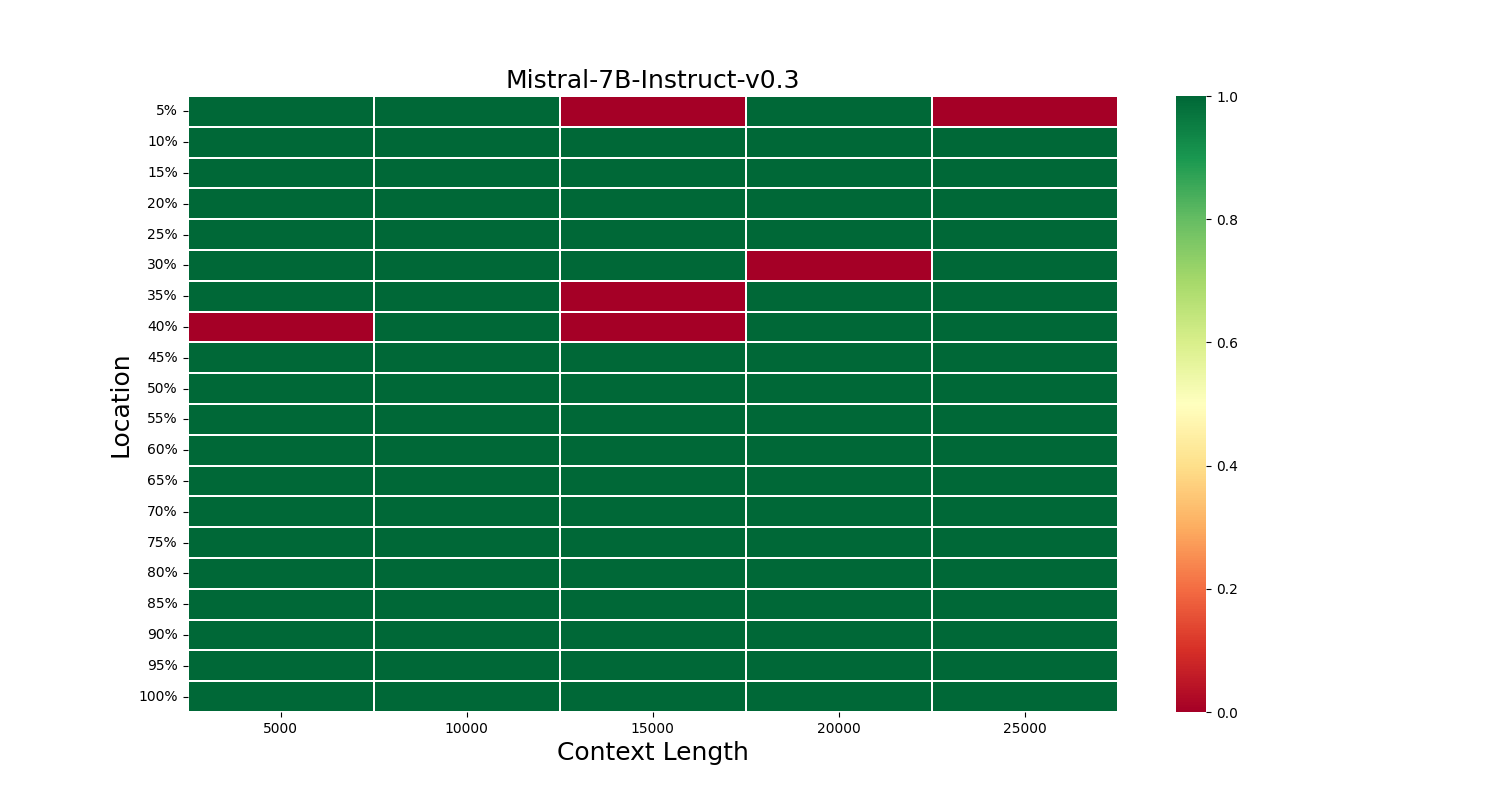

This heatmap indicates the model's success in recalling information at different locations in the context. Green signifies success, while red indicates failure.
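The page does not describe how the heatmap is assembled. One plausible way to build this kind of recall grid is to plant a fact at a chosen depth in contexts of different lengths, record whether the model retrieves it, and average the outcomes for each (length, depth) cell. The sketch below shows only that aggregation step. The `build_recall_grid` helper, the depth percentages, and the trial data are all hypothetical. A plotting tool would then color cells at 1.0 green and cells at 0.0 red.

```python
def build_recall_grid(trials, lengths, depths):
    """Return a len(depths) x len(lengths) matrix of retrieval success rates.

    Hypothetical helper. Each trial is (context_length, depth_pct, success).
    Cells with no trials are None so they can be left blank when plotted.
    """
    totals = {}
    hits = {}
    for length, depth, success in trials:
        cell = (length, depth)
        totals[cell] = totals.get(cell, 0) + 1
        hits[cell] = hits.get(cell, 0) + int(success)
    return [
        [hits[(l, d)] / totals[(l, d)] if (l, d) in totals else None
         for l in lengths]
        for d in depths
    ]


# Hypothetical trials, not the data behind this page's heatmap.
lengths = [4000, 16000]   # context length in tokens (columns)
depths = [0, 50, 100]     # position of the planted fact, % into the context (rows)
trials = [
    (4000, 0, True), (4000, 50, True), (4000, 100, True),
    (16000, 0, True), (16000, 50, False), (16000, 50, True),
]
grid = build_recall_grid(trials, lengths, depths)
for depth, row in zip(depths, grid):
    print(depth, row)
# 0 [1.0, 1.0]
# 50 [1.0, 0.5]
# 100 [1.0, None]
```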

Performance Summary

| Task | Task insight | Cost insight | Dataset | Context adherence | Avg response length |
|---|---|---|---|---|---|
| Short context RAG | The model struggles with reasoning and comprehension in short-context RAG. It shows poor mathematical proficiency, as evidenced by its performance on the DROP and ConvFinQA benchmarks. | We recommend using Llama-3-8b instead, which costs the same. | DROP | 0.69 | 263 |
|  |  |  | HotpotQA | 0.80 | 263 |
|  |  |  | MS MARCO | 0.92 | 263 |
|  |  |  | ConvFinQA | 0.72 | 263 |
| Medium context RAG | Great performance overall, with some degradation beyond a context length of 10,000 tokens. | Good performance, but if closed-source models are an option, we recommend Gemini Flash, which is 2x cheaper, for best results. | Medium context RAG | 0.94 | 263 |
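The page does not state how the short-context ChainPoll score of 0.78 relates to the per-dataset rows. An unweighted mean of the four short-context adherence values comes to 0.7825, which rounds to 0.78. The medium-context score of 0.94 matches its single row directly. The sketch below checks that arithmetic. Treating the summary score as a simple mean is an assumption, not something this page confirms.

```python
from statistics import fmean

# Context adherence per dataset, copied from the short-context rows of the table.
short_context = {"DROP": 0.69, "HotpotQA": 0.80, "MS MARCO": 0.92, "ConvFinQA": 0.72}

# Assumption: the headline score is an unweighted mean across datasets.
mean_score = fmean(short_context.values())
print(f"unweighted mean: {mean_score:.4f}")  # unweighted mean: 0.7825
print(f"rounded: {mean_score:.2f}")          # rounded: 0.78
```

If the datasets had different numbers of examples, a weighted mean could give a different figure. The fact that the unweighted mean reproduces 0.78 is consistent with, but does not prove, the simple-mean reading.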